A Helping Hand or Pandora’s Box?

A revolution in health care through the use of AI seems imminent, but is there enough regard for privacy, safety, and ethics?

*In a nod to AI, this headline was written by ChatGPT after the editor fed it a summary of the article (although the article itself, we assure you, was written by a human). The other AI-generated headline suggestions: Boundless Potential and Ethical Quandaries; Powerful Ally with Potential Perils; A Balancing Act Between Medical Marvel and Ethical Minefield.

Would anyone want to take a hard look at the possibilities and complexities of AI in medicine?

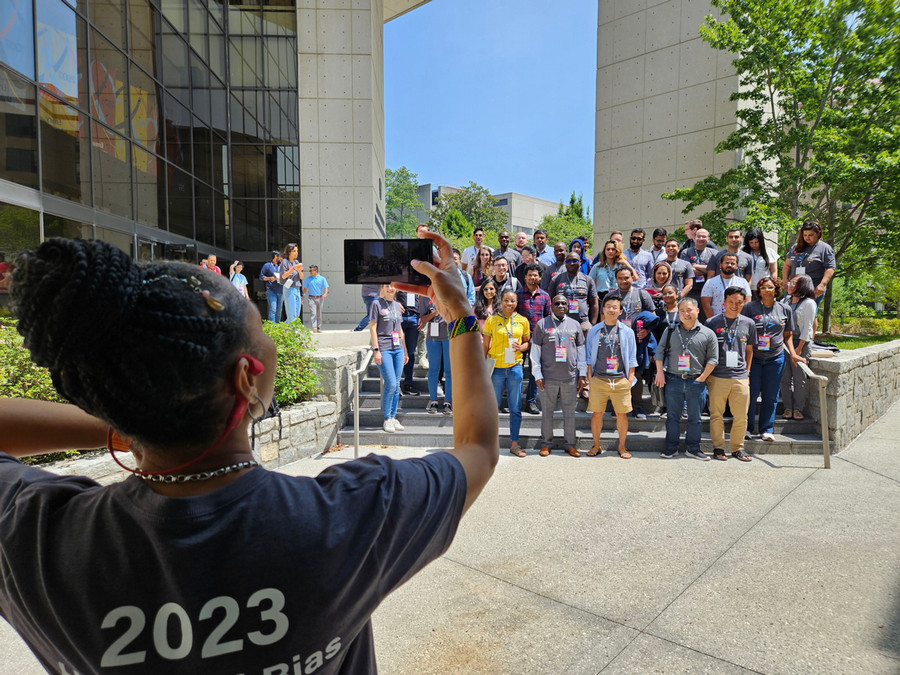

When Judy Gichoya held the first Artificial Intelligence (AI) Bias Datathon at Emory a few months ago, she had no idea who might turn up. Anant Madabhushi harbored similar doubts when he organized Emory's first symposium on AI in Health in November. They needn't have worried.

Gichoya, associate professor of radiology in Emory's School of Medicine, wound up welcoming 132 people to the Emory campus from as far away as Singapore, Uganda, and Brazil to match wits with some of the biggest datasets and most powerful artificial intelligence programs on earth.

And Madabhushi, who leads the newly established Emory Empathetic AI for Health Institute, wondered if he could even fill the 160 seats in the Health Sciences Research Building auditorium. "It turns out," he says, "we had to shut off registration the day before because we topped 450. It was remarkable to see the excitement, the enthusiasm."

This level of enthusiasm for both events reflects not only the excitement AI now generates worldwide but also support for Emory's novel approach. AI in medicine offers the promise of better, more efficient diagnoses, more effective clinical outcomes, and treatments tailored to the individual. A revolution in health care, driven by AI tools, seems not only inevitable but imminent.

A participant in the Emory Health Bias Datathon 2023 takes a group photo of fellow attendees, who “dove head-first into the world of cutting-edge data analysis and sizzling insights in the service of creating a more equitable future for all.”

The excitement, however, is tempered with caution. "We've relegated the monitoring of large language models to the people who are building the models," Gichoya says. "We are waiting for Google, Facebook, or Microsoft to do the safety work. I don't think they can do the safety work. That should be our job."

This is why the Emory Empathetic AI for Health Institute (AI.Health), part of the university-wide AI.Humanity initiative, was created. The institute's goal is to break down disciplinary barriers between researchers working in AI in medicine and the humanities, letting them work with common purpose to use the power of machine learning and big data for disease prevention and better patient care while ensuring human elements like empathy, creativity, and ethics are not overlooked.

Provost Ravi Bellamkonda launched the AI.Humanity initiative in 2022 with a dual mission: to help advance and apply AI's vast potential while ensuring the technology is used wisely. In medicine, this includes a focus on promoting more equitable health outcomes.

"I get asked a lot, what is Emory doing in AI?" Bellamkonda says. "We engage because we care about health. We care about social justice, law, entrepreneurship, and the economy. We care about the arts and humanities. We engage because we would like to use the most powerful tools that exist to further these things we are passionate about."

"We are in the middle of an AI revolution," he adds. "Our job as a liberal arts university is to think about what this technology is doing to us."

Carlos del Rio, interim dean of Emory School of Medicine, is convinced that AI will empower researchers designing next-generation drugs, vaccines, and diagnostics, as well as help leverage data for value and efficiency in health care. But, he says, "if AI mechanisms and learning bases don't include diverse populations, they will create algorithms that are actually going to increase health disparities."

AI, del Rio says, must be used not only to develop medical advances and innovations, but also to address social determinants of health within communities.

"While we all like genetic medicine, will your genetic code determine what happens to you? It's really your zip code that determines when you're going to die, what diseases you're going to get, what access to health care you're going to have," he says. "My hope is that AI would be one of the tools that helps us address this."

Questions like these recently led the Biden Administration to issue an Executive Order setting broad standards for AI safety, security, and privacy. For the first time, it puts the power of the U.S. government behind efforts to ensure that AI's benefits will work equally well for all individuals and groups.

Science, Medicine, and the Humanities

At Emory, the AI.Humanity initiative will address the same challenges by bringing biomedical scientists and engineers together with scholars in literature, the arts, and the social sciences to provide the kind of creative ferment and insights not always available to science alone. Emory Center for Ethics Director Paul Root Wolpe, Schinazi Distinguished Research Chair of Jewish Bioethics and professor of medicine, is at the center of many of these growing discussions.

Wolpe says many questions remain about how AI will perform on the tough diagnoses even physicians can get wrong. But it's exactly these thorny questions that convinced Emory to structure its AI programs to bring scientists and humanists together. "The humanities help us imagine futures," he says. "And though we never know which of those futures is going to come to pass, the humanities sometimes help us decide the future we want and warn us where the pitfalls are."

Case in point: 2018 was the 200th anniversary of the publication of Frankenstein, Wolpe says, and "200 years later, we're still reading that as an important cautionary tale."

Unlike much of the tech-focused AI research elsewhere, Emory builds humanistic thinking into the process, and for good reason.

"It's critical that as computer scientists and biomedical engineers are developing these algorithms, they're connected to the folks in the humanities thinking about the ethical issues," says Madabhushi, who, in addition to being director of AI.Health, is Woodruff Professor of Biomedical Engineering and a research health scientist at the Atlanta VA Medical Center.

Madabhushi is hands-on with this pioneering research as well. His lab investigates ways to develop AI to improve outcomes for people with cancer and other diseases. "Our group is pioneering AI decision-support tools to aid clinicians in treating patients suffering from cancers including lung, prostate, brain, breast, cervical, ovarian, endometrial, colon, head and neck, and kidney, as well as nononcologic cardiovascular, renal, ophthalmologic, and respiratory diseases," he says.

Indeed, medical science has only scratched the surface of ways AI could be employed as a clinical, research, and even teaching assistant. The tools collectively known as artificial intelligence, including large language models and other machine learning methods, work by applying sophisticated pattern recognition to data, potentially including the vast amounts of medical data generated in contemporary health care.

In the hands of those who know how to use it, AI can scan medical images and recognize undetected health issues, help diagnose conditions from symptoms with remarkable accuracy and consistency, and identify diseases earlier and more efficiently. It can make surprisingly accurate risk predictions that contribute to preventive care. It can help doctors with routine notetaking during patient exams. It can, through machine learning and image processing, read one X-ray or tissue slide, or a thousand, to the same standards and never tire.

It can provide unparalleled support for clinical decisions, such as helping assess which patients need chemo and which do not, lowering the cost of health care and avoiding one-size-fits-all treatments. It can streamline appointment scheduling and reimbursements and improve quality and patient safety outcomes. It can identify and track disease outbreaks and monitor mitigation strategies.

A simple search of the terms "AI" and "medicine" turns up a seemingly endless list of uses in all areas of patient care, diagnostics, education, drug development, imaging, infectious disease, medical statistics, and health care operations.

Real-World Applications

Some of those uses are already here. Gari Clifford, chair of Emory's Department of Biomedical Informatics, collaborated with his wife, Emory anthropologist Rachel Hall-Clifford; Indigenous Mayan midwives; and a Guatemalan nongovernmental organization (NGO) to co-design AI-based devices the midwives can use to address a range of problems for pregnant women, including fetal growth restriction. About half of Indigenous Mayan women deliver at home with the help of midwives who may have low literacy and few resources to screen for complications.

Clifford, who trained in physics and engineering, spent a decade working with Hall-Clifford and Indigenous populations to gather data with the midwives, searching for technological improvements that would make a meaningful difference in the lives of patients. Using this co-design approach, the team developed an AI algorithm accurate enough to identify fetal growth restriction using an inexpensive device plugged into a low-cost smartphone. The tool allowed the midwives to spot and record abnormalities in pregnant women.

"This is where AI meets medical anthropology," Clifford says. "We partner with these communities and NGOs to work out if and how AI-driven technology can provide assistive diagnostics and referrals." Clifford says this tool, through the safe+natal program, was able to drive maternal mortality down dramatically.

Other AI capabilities may make it possible to custom-tailor treatments, avoiding unnecessary care and delivering health care with greater precision. "How can AI be used to impact health disparities?" Madabhushi asks. "How can we use these tools to learn subtle differences in the disease appearance among different populations, between Black patients and white patients, for instance? Can that allow us to create more precise, population-specific tools that will be more accurate in Black patients? We've seen already that a lot of the tools that we have simply don't work with minority patients in underrepresented populations."

As AI develops in medicine, it also raises new and baffling problems, especially around which data the programs are trained on to recognize patterns. The medical profession is beginning to recognize that data is created by humans, who must decide which population will be studied, what categories patients will be divided into, how to define those categories, and what counts as data rather than mere noise.

In practice, this means that even when medical databases don't exhibit overt bias, bias can still be present in subtle ways.

"We know that CT scanners or MRI scanners can be very different across different hospitals," Madabhushi says. "And we know the differences in the imaging then have an impact on the AI. So we really need to think about being very intentional and trying to ensure that we're not limiting the training of the AI algorithm to one specific site or group of patients, instead trying to inject as much diversity as we can."

"We've seen all these studies that prove multimodal data works. Why are we not using this currently? One reason is the barriers to large-scale data," says Marly van Assen, assistant professor of radiology and imaging sciences at Emory School of Medicine, whose research focuses on AI in cardiac imaging. "Getting large databases is hard. There's a lot of missing data on patients. Patients move around, not a lot of data is actively recorded. A lot of the patients that we're interested in don't necessarily show up at the imaging exam. And those are the patients that would be very interesting if they have the worst outcomes. But because they show atypical complaints, they don't necessarily always get referred to imaging."

Finding Hidden Biases

Bias was very much on the minds of attendees at the AI Bias Datathon, which Gichoya created as an invitation and challenge to experts and anyone else who cared about the future of AI.

Along with eating, dancing, and socializing, the datathon focused on a serious question: Could the students and AI experts who came from around the world use their skills to find hidden biases lurking in medical data? Attendees gathered in the medical school auditorium, where they formed teams, each assigned a different AI application. Teams had the weekend to question, test, and thoroughly probe their applications for hidden flaws.

Don't work on something you're familiar with, Gichoya cautioned. But don't be so ambitious in your choice that you wind up frustrated. Focus on what you can learn.

One team was tasked with investigating a database of knee X-rays that showed a disparity in pain perception between Black patients and white patients. Among other things, they investigated whether this was due to the pain scale, originally developed 70 years ago in England on white male patients.

Could AI have discovered some hidden racial difference in pain perception that escaped traditional expertise? To test that, they also interrogated an Emory-created database with a more diverse population.

Another team was assigned to investigate the popular online model ChatGPT. Although ChatGPT isn't considered a scientific program, Gichoya still saw value in having a team test how it works. "I did not start off with a specific intent," she says. "Whatever we cannot answer today will either be used as research for the next few months, or we'll be ready for it next year."

Team 13 did what hackers call "red teaming": an officially authorized attack on a system to expose its weaknesses. The team set out to determine how they could fool the AI into creating potentially harmful outputs, starting with a simple negative review of a doctor visit.

Gari Clifford, chair of Emory biomedical informatics and professor of biomedical engineering at Georgia Tech, invents ways to apply AI to health care.

These challenges also apply to more sophisticated AI models. Last year, Gichoya, a radiologist by training, investigated how well deep learning models could detect a patient's race from chest X-rays alone. Her research team found, inexplicably, that deep learning models can be trained to predict race with up to 99 percent accuracy, using no information but the X-ray itself. The models could also predict race on other anatomical images and on deliberately degraded images, even when clinical experts could not. "I can show you that deep learning models may not know that this is Judy, but they can say Judy is female and Black," she says.

How is that possible? Gichoya's team investigated whether the models were measuring some kind of proxy for race such as body mass but couldn't find one. She thinks this mystery could have unexpected benefits for diagnoses that escape even trained clinicians.

"These hidden signals hold tremendous promise," she says. "They are saying, 'I am seeing things that radiologists cannot see, that other doctors cannot see.' The hidden potential is that on this one X-ray, we could say, look, this patient has diabetes. The only problem, as of today, is we cannot tell why."

Gichoya believes the challenge is to figure out how to find medical uses for the things this model can do, even if its creators don't understand how. Is that a bonus or a bug? Those connected with AI at Emory recognize that such power in a machine raises disturbing ethical issues about who controls it and for what purposes.

"AI scares people," Clifford says. "When you have a very large model with billions of free parameters in it, it's very difficult to imagine all the things that it's going to possibly do. And I think people have a fear of that."

Concerns like these are part of why AI.Humanity was created.

Gichoya has introduced her students to this kind of dilemma, teaching about real-world sepsis models that have diagnosed patients in unexpected ways. She says it's possible to think you understand a model, then have it take you by surprise in actual performance.

"This is the fight between explainability versus transparency," she says. Still, she says she is willing to use models that may have biases against some subsets of the population, as long as everyone understands that's how they work.

"This race to look for the perfect, ultimate model that is not biased is, today, impractical," she says. "But I do think we need to have at least some basic recipe for transparency. We need to know when things don't work right."

Adding Education to the Mix

The AI.Humanity initiative includes a strong educational component through the Emory Center for AI Learning, to give everyone — students, faculty, and staff — opportunities to obtain basic literacy in AI or more advanced skills if they desire.

As part of this commitment, Emory is hiring up to 60 new faculty to apply their AI expertise to fields ranging from biology to medicine to physics to law.

The center offers short courses and workshops on topics ranging from AI basics to applying AI to specific user objectives to advanced subjects such as high-performance cloud computing and sophisticated neural networks.

Center Director Joe Sutherland, also a visiting assistant professor in Emory's Department of Quantitative Theory and Methods (QTM), worked as a technology executive before becoming an educator. In the past, he has cautioned about the dangers of overhyping AI while introducing business executives to the substance behind the faddishness.

"Emory started thinking about crafting this AI.Humanity initiative and well-rounded artificial intelligence programs that are not just about applying a method to data, not just about the user needs or ethics implicated, but all of it together," Sutherland says. "The key is, how do we marshal the wonderful intellectual resources at this university for the betterment of society and humanity?

"I see artificial intelligence as the electricity that's going to empower all of these things and help to scale them out. We're going to develop deeper insights faster than before, enabling us to better orient to the future.

"If you're about to graduate and go into the market, you need to be able to speak intelligently about AI and what it means for you and your peers."

A student in Sutherland's class, Zaim Zibran, says the course offered a chance to explore his area of passion in addition to data fundamentals.

"We began with asking, what are some problems in the world that need to be solved? And from that, how can we reverse-engineer the AI tools needed to reach our solution? AI is and always will be a means to an end, never an end in itself."